Security research firm Corellium has launched a new Open Security Initiative, and one of its first targets will be the security and privacy of Apple’s new Child Safety measures.

The company announced the initiative to mark its fourth birthday, stating that the Corellium Open Security Initiative “will support independent public research into the security and privacy of mobile applications and devices through a series of awards and access to the Corellium platform.”

From the post:

Conducting third-party research on mobile devices remains difficult, inefficient, and expensive. Particularly in the iOS ecosystem, testing typically requires a jailbroken physical device. Jailbreaks rely on complex exploits, and they often aren’t reliable or available for the latest device models and OS versions.

At Corellium, we recognize the critical role independent researchers play in promoting the security and privacy of mobile devices. That’s why we’re constantly looking for ways to make third-party research on mobile devices easier and more accessible, and that’s why we’re launching the Corellium Open Security Initiative.

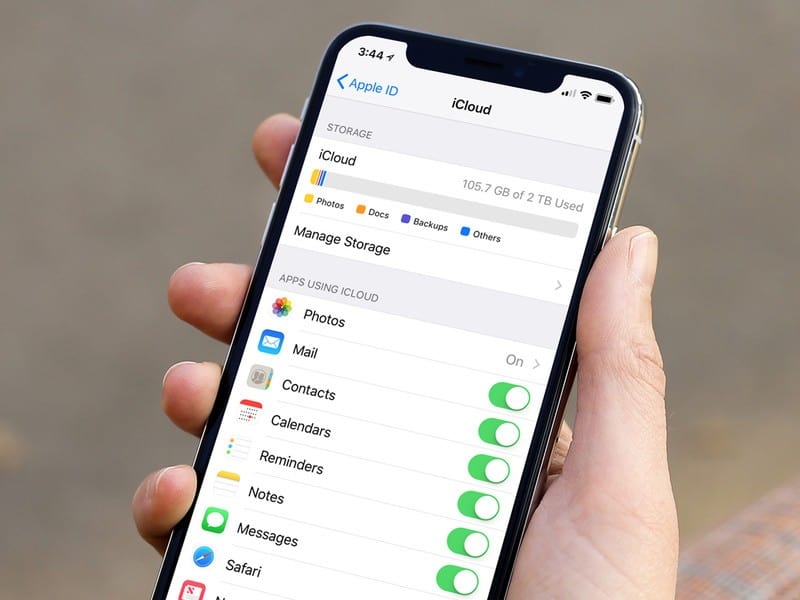

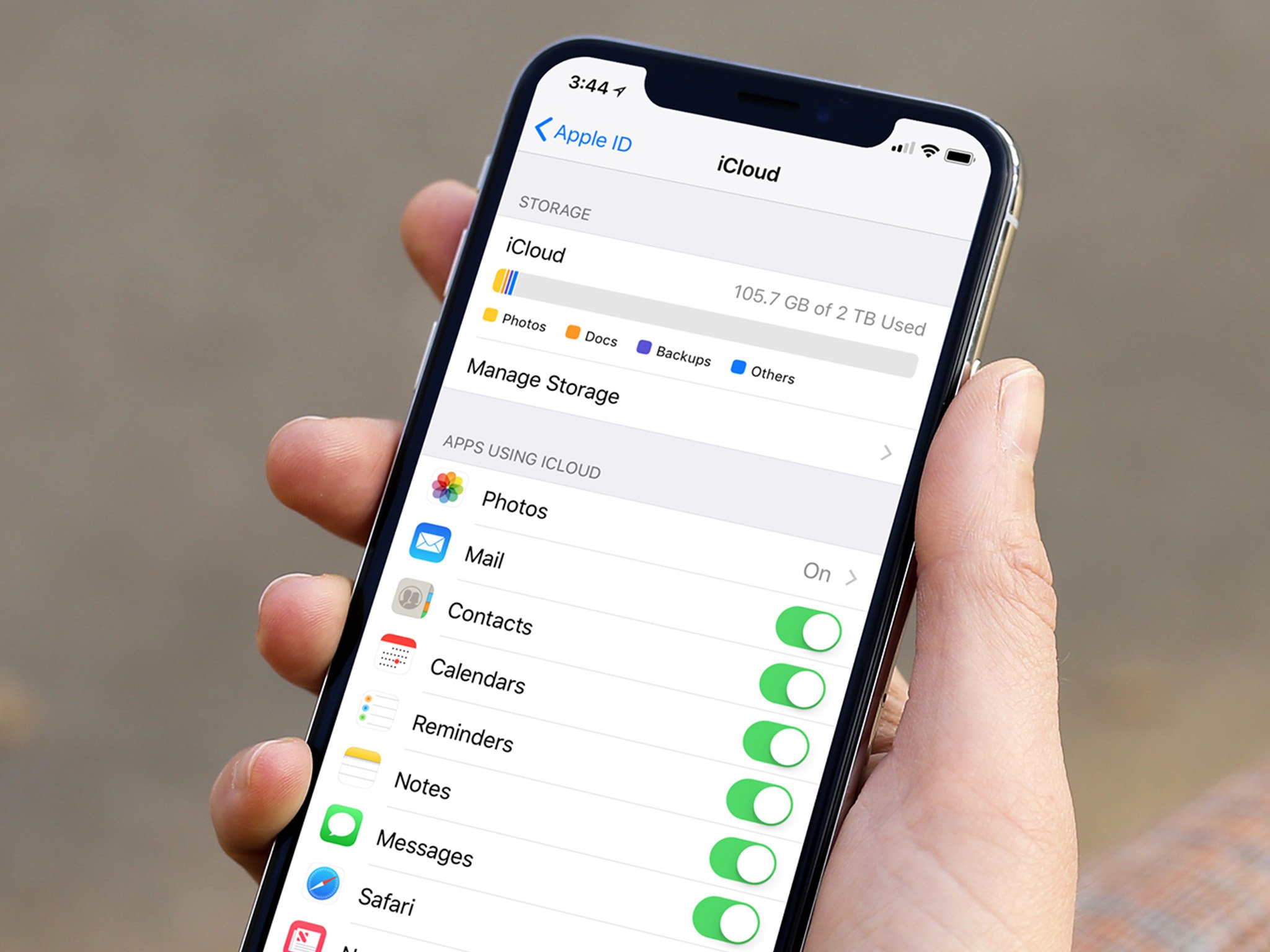

Its first proposed investigation will examine Apple’s new Child Sexual Abuse Material (CSAM) detection system for iCloud Photos:

Setting aside debates on the civil and philosophical implications of this new feature, Apple has made several privacy and security claims about this new system. These claims cover topics as diverse as image hashing technology, modern cryptographic design, code analysis, and the internal mechanics and security design of iOS itself. Errors in any component of this overall design could be used to subvert the system as a whole, and consequently violate iPhone users’ privacy and security expectations.

Corellium notes that Apple “has encouraged the independent security research community to validate and verify its security claims”, with Apple’s Craig Federighi telling the Wall Street Journal last week that security researchers would be able to spot any changes to the scope of its CSAM detection system. Corellium said it applauded Apple’s “commitment to holding itself accountable by third-party researchers” and stated that its platform was “uniquely capable of supporting researchers in that effort.”

You can read the full announcement, including details about Corellium’s initiative, how to enter, and the prizes on offer, here.

Apple’s recent CSAM announcement has drawn the ire of some security and privacy experts, who worry that the technology is intrusive or could one day be used by governments to monitor citizens. Corellium’s announcement comes less than a week after it emerged that Apple had settled a major lawsuit against Corellium over the very iOS virtualization software that Corellium says can now aid security researchers.